Two Problems With Scientific Research

- JYP Admin

- Nov 7, 2020

Updated: Oct 8, 2023

Author: Arpan Dey

You have probably heard this question at some point: "Is most published research false?" The answer is yes and no.

In his book “How Not To Be Wrong: The Hidden Maths Of Everyday Life,” Jordan Ellenberg offers this example. Suppose you visit a hospital and examine the gunshot cases. You find that about 90% of the patients were shot in the leg, while only 10% were shot in the chest. If asked where a person is more likely to get shot, you would probably say the leg. But it would be wrong to conclude that the leg is more vulnerable to gunshots than the chest. Most of the people who were shot in the chest simply never made it to the hospital. To determine where you are most likely to get shot, you must consider everyone who got shot, not just those who reached the hospital. In other words, you should always keep the big picture in mind.

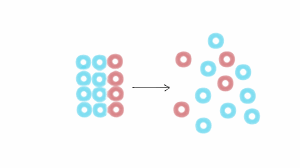
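The arithmetic behind the hospital example can be sketched in a few lines of Python. The numbers are hypothetical and chosen only to reproduce the 90%/10% split above: we assume that shots hit legs and chests equally often, that 90% of people shot in the leg reach the hospital alive, and that only 10% of those shot in the chest do.

```python
# Hypothetical numbers, chosen only to reproduce the 90% / 10% split in the text.
shot = {"leg": 1000, "chest": 1000}          # assumption: both are hit equally often
reach_hospital = {"leg": 0.9, "chest": 0.1}  # assumption: chance of surviving to hospital

# Expected number of people with each wound who actually arrive at the hospital.
in_hospital = {part: shot[part] * reach_hospital[part] for part in shot}
total_in_hospital = sum(in_hospital.values())
total_shot = sum(shot.values())

for part in shot:
    print(f"{part}: {in_hospital[part] / total_in_hospital:.0%} of hospital cases, "
          f"{shot[part] / total_shot:.0%} of all people shot")
```

In this toy model, legs and chests are hit equally often, yet the hospital sees nine leg wounds for every chest wound: the missing chest cases are exactly the ones that never arrive.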

Just as the hospital example leaves out the people who never reached the hospital, scientific publishing leaves out a great deal of data, sometimes deliberately. Scientists have to publish papers, and most journals only accept papers whose results are statistically significant. But statistics is a tool that is easy to misuse. As Ellenberg points out, with the usual significance threshold there is a 1-in-20 chance of getting a "statistically significant" result even when there is no real effect at all.

Suppose scientists want to find out whether jelly beans cause acne. Initially, they find no link at all. Then they try jelly beans of 20 different colors. By pure chance, one of them (say, green) shows a link with acne. This should not surprise anyone: if each of 20 independent tests has a 1-in-20 chance of a false positive, the probability that at least one of them comes out significant by luck alone is 1 - 0.95^20, or about 64%. Nevertheless, the next day's headlines read something like: "Link found between green jelly beans and acne; results statistically significant." This is enough to convince most laypeople and even some scientists.

Now suppose that green jelly beans are tested by 20 different scientists in 20 different laboratories. Nineteen of them find no statistically significant effect. They stay quiet, since they know that no journal is going to publish their findings. The twentieth scientist's results agree with the already published result, and he trumpets the fact. This is why much published research that relies on statistical analysis is questionable. Selective publication can give rise to many needless problems and misconceptions. For instance, a group of researchers claimed to have found a hidden code in the text of the Bible, and thereby to have proved the existence of God. However, such results almost always vanish when the experiment is repeated in an unbiased manner.
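A small simulation makes the 64% figure concrete. It is a sketch under assumed conditions, not a model of any real study: each hypothetical "colour" study compares 30 jelly bean eaters with 30 non-eaters whose acne scores are drawn from the same normal distribution (so there is no real effect), and significance is judged with a two-sided z-test at the 0.05 level. The function names and group size are made up for illustration.

```python
import math
import random

ALPHA = 0.05     # conventional significance threshold
COLOURS = 20     # number of jelly bean colours tested
GROUP_SIZE = 30  # hypothetical participants per group


def two_sided_p(z: float) -> float:
    """Two-sided p-value of a standard normal test statistic z."""
    return math.erfc(abs(z) / math.sqrt(2))


def null_study(rng: random.Random) -> float:
    """One hypothetical study in which jelly beans have NO effect on acne.

    Both groups' acne scores come from the same distribution (mean 0, sd 1),
    so any 'significant' difference is pure chance."""
    eaters = [rng.gauss(0, 1) for _ in range(GROUP_SIZE)]
    others = [rng.gauss(0, 1) for _ in range(GROUP_SIZE)]
    diff = sum(eaters) / GROUP_SIZE - sum(others) / GROUP_SIZE
    standard_error = math.sqrt(2 / GROUP_SIZE)  # sd is known to be 1 in each group
    return two_sided_p(diff / standard_error)


def some_colour_significant(rng: random.Random) -> bool:
    """Test every colour; True if at least one of them looks 'significant'."""
    return any(null_study(rng) < ALPHA for _ in range(COLOURS))


rng = random.Random(2020)
trials = 1000
simulated = sum(some_colour_significant(rng) for _ in range(trials)) / trials
analytic = 1 - (1 - ALPHA) ** COLOURS

print(f"analytic:  {analytic:.3f}")
print(f"simulated: {simulated:.3f}")
```

The same `null_study` function also models the 20 laboratories re-testing green jelly beans: since each has a 1-in-20 chance of a false positive, on average one of the 20 will report a significant result, and that is the one most likely to be published.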

Whether a result counts as statistically significant is judged against the null hypothesis. Your data must not only be consistent with your prediction; they must also be inconsistent with the negation of your prediction. To quote Ellenberg, “If I claim I can make the Sun come up at dawn with my mind, and it does, you should not be impressed by my powers; but if I claim I can make the Sun not come up, and it does not, then I have demonstrated an outcome very unlikely under the null hypothesis, and you would best take notice.”

Suppose you want to show that there is some relation between A and B. Then your null hypothesis is that there is no relation between A and B. Your results are statistically significant only if the null hypothesis can be safely rejected, and it is rejected only if the observed data would be sufficiently unlikely were the null hypothesis true. In null hypothesis significance testing, this is measured by the p-value: the probability of obtaining test results at least as extreme as those actually observed, assuming that the null hypothesis is correct. A very small p-value means that such an extreme outcome would be very unlikely under the null hypothesis. By convention, a p-value below 0.05 is usually taken to mean that the result is statistically significant. However, scientists (both intentionally and unintentionally) tweak their analyses and ignore inconvenient circumstances to get the desired p-value, knowing that their results must be statistically significant to be accepted by most journals.
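To make the definition of the p-value concrete, here is a minimal Python sketch using a hypothetical coin experiment (not taken from Ellenberg's book). The null hypothesis is that the coin is fair, and the p-value is the probability of seeing at least as many heads as were actually observed if that null hypothesis were true. The function name is illustrative.

```python
from math import comb


def p_value_at_least(k: int, n: int, p: float = 0.5) -> float:
    """One-sided p-value: P(X >= k) for X ~ Binomial(n, p).

    This is the probability, assuming the null hypothesis (success chance p),
    of a result at least as extreme as k successes out of n trials."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


# Hypothetical experiment: someone claims a coin favours heads.
# Null hypothesis: the coin is fair, so p = 0.5.
flips, heads = 100, 60
p_value = p_value_at_least(heads, flips)

print(f"p-value: {p_value:.4f}")
if p_value < 0.05:
    print("statistically significant at the 0.05 level: reject the null hypothesis")
else:
    print("not statistically significant: cannot reject the null hypothesis")
```

Getting 60 or more heads in 100 flips of a fair coin has a probability of roughly 3%, so this result clears the conventional 0.05 bar. The calculation says nothing, however, about how many other coins were flipped before this one was reported.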

Thus, we come back to the same problem: we do not see the big picture. Just because 90% of the patients in the hospital were shot in the leg, we cannot conclude that the leg is more vulnerable to gunshots. Ellenberg gives another example to emphasize this point. Suppose you receive 10 emails from a stockbroker, each correctly predicting whether a certain stock will rise or fall. You would be tempted to believe that his next prediction is also likely to be correct. But let us look at the big picture. The stockbroker emails 10,000 people. To 5,000 of them, he says that the stock will fall; to the other 5,000, he says that it will rise. One of the two predictions must turn out to be correct. He then splits the 5,000 people who received the correct prediction into two halves and emails them again: he tells one half that a certain stock will fall and the other half that it will rise. After 10 rounds of this, roughly 10 people (10,000 / 2^10 ≈ 10) will have received 10 correct predictions in a row. Those people think that he is always right about such predictions, only because they are unaware of the thousands of other predictions that failed. (The people who received an incorrect prediction get no further emails, so they simply forget about it.) That is the problem. In science, we are unaware of the thousands of ‘statistically insignificant’ results that never made it into the journals.
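The halving scheme can be checked with a short simulation. The function name `run_scam` and the coin-flip "market" are illustrative assumptions. When a group has an odd number of people, one half is one person larger than the other, so the count after 10 rounds comes out as 9 or 10 rather than exactly 10,000 / 2^10.

```python
import random


def run_scam(recipients: int, rounds: int, rng: random.Random) -> int:
    """Return how many recipients have seen only correct predictions after `rounds` emails."""
    still_impressed = recipients
    for _ in range(rounds):
        told_rise = still_impressed // 2         # half are told the stock will rise
        told_fall = still_impressed - told_rise  # the rest are told it will fall
        stock_rose = rng.random() < 0.5          # the market does whatever it does
        # Only those who got the correct call receive the next email.
        still_impressed = told_rise if stock_rose else told_fall
    return still_impressed


rng = random.Random(42)
survivors = run_scam(10_000, 10, rng)
print(f"{survivors} people saw 10 correct predictions in a row")
```

Whatever the market does, the stockbroker never needs any skill: a fixed fraction of his list is guaranteed to see a perfect record.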

Now let us come to the second problem: regression to the mean. This phenomenon has been observed in business performance, hereditary traits, and elsewhere. Extreme measurements tend to be followed by ones closer to the mean: the best performers tend to do worse next time, and the worst tend to improve toward the average. If your parents are very tall, you are likely to be taller than average, but not as tall as them. Why? Because heredity alone cannot explain your parents being very tall. They are not just taller than average, but far taller than average, and your grandparents being tall cannot account for this on its own. Luck, environment, and circumstances must have played a part as well. But it is very unlikely that you will get lucky in exactly the same way as your parents and end up as tall as them. Similarly, if your parents are very short, you are likely to be shorter than average, but probably not as short as them. Tall parents do not keep producing ever-taller offspring. Over generations, the descendants of very tall people tend to move toward the average height: you are tall, but not as tall as your parents; your children will tend to be shorter still, and your grandchildren shorter again. But if a descendant ends up below average, his or her children will tend to be taller. These are tendencies on average, not certainties: each generation tends to move down toward the mean if its parents were above it, or up if they were below it. Nor does this mean that everyone eventually becomes average; new extremes keep arising by chance, so the spread of heights in the population stays roughly the same.
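The "heredity plus luck" argument can be illustrated with a toy simulation. Everything here is an assumption for illustration, not real genetics: a person's height above or below the average is an inherited part plus luck, each with standard deviation 1 in arbitrary units; a child keeps the parent's inherited part exactly but gets fresh, independent luck; and "very tall" means more than 2.5 units above average. The sketch covers a single generation only.

```python
import random
import statistics

rng = random.Random(11)
N = 50_000  # hypothetical parent-child pairs

# Toy model (an assumption): height deviation = inherited part + luck,
# each with standard deviation 1 (arbitrary units).
inherited = [rng.gauss(0, 1) for _ in range(N)]
parents = [g + rng.gauss(0, 1) for g in inherited]
# Simplification: the child keeps the parent's inherited part but draws fresh luck.
children = [g + rng.gauss(0, 1) for g in inherited]

# "Very tall" parents: more than 2.5 units above average.
very_tall = [i for i, h in enumerate(parents) if h > 2.5]
parent_mean = statistics.fmean(parents[i] for i in very_tall)
child_mean = statistics.fmean(children[i] for i in very_tall)

print(f"very tall parents: {parent_mean:+.2f} vs. average")
print(f"their children:    {child_mean:+.2f} vs. average")
# The overall spread of heights is the same in both generations.
print(f"spread: parents {statistics.pstdev(parents):.2f}, "
      f"children {statistics.pstdev(children):.2f}")
```

In this model, the children of very tall parents end up about half as far above average as their parents, because they inherit the parents' inherited part but not their luck, while the spread of heights across the whole population does not shrink.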

Suppose you develop a drug to treat obesity and test it on ten extremely obese people. Note: extremely obese, not just obese. As regression to the mean predicts, after some time (perhaps a few months or years), these people will most likely lose some weight, even if only a little, whether or not the drug does anything. You would want to believe that your drug worked, but that may not be the case. From this experiment alone, it is impossible to tell how effective your drug really was; you would need to compare the results against a similar group of people who did not take it. Thus, the problem for scientific research is clear.
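The drug example can be sketched as a simulation in which the drug, by construction, does nothing. All numbers are hypothetical: each person's measured weight is a long-run weight plus a temporary fluctuation, the "extremely obese" are taken to be the heaviest 1% at a first weigh-in, and they are randomly split into a drug group and a control group. The groups are larger than ten people only so that the simulated averages are stable.

```python
import random
import statistics

rng = random.Random(7)
N = 100_000  # hypothetical population

# Made-up numbers (kg): each person's long-run weight, plus a temporary
# fluctuation on the day of the first weigh-in.
long_run = [rng.gauss(80, 12) for _ in range(N)]
first = [w + rng.gauss(0, 5) for w in long_run]

# Recruit the heaviest 1% at the first weigh-in ("extremely obese").
recruits = sorted(range(N), key=lambda i: first[i], reverse=True)[: N // 100]
rng.shuffle(recruits)  # random assignment to the two groups
half = len(recruits) // 2
drug_group, control_group = recruits[:half], recruits[half:]


def mean_change(group: list[int]) -> float:
    """Average weight change (kg) at a second weigh-in months later.

    The 'drug' does nothing: everyone keeps the same long-run weight,
    and only the temporary fluctuation is redrawn."""
    return statistics.fmean(long_run[i] + rng.gauss(0, 5) - first[i] for i in group)


drug_change = mean_change(drug_group)
control_change = mean_change(control_group)
print(f"drug group:    {drug_change:+.1f} kg")
print(f"control group: {control_change:+.1f} kg")
print(f"estimated drug effect: {drug_change - control_change:+.1f} kg")
```

Both groups "lose" several kilograms on average, so looking at the treated group alone would suggest the drug works; only the comparison with the control group shows that it does nothing.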

Bibliography

Ellenberg, Jordan. "How Not To Be Wrong: The Hidden Maths Of Everyday Life". Penguin.
